In my two previous posts I evaluated a number of Automatic Speech Recognition systems and selected Google and Speechmatics as the best fit for my needs. Here, after another long gap, I’m returning with updated results and discussion, including excellent new results from Rev.ai, 3Scribe and AssemblyAI.

For this evaluation I’m using the same method and the same twelve audio clips as I described in my previous post.

Results

The table below presents the results. The first column includes the approximate date of the ASR processing in YYYY-MM format. The rows are ordered by the median of their WER scores across all 12 files. Each cell is color coded according to the degree to which the WER score is better (lower, deeper green) or worse (higher, deeper red) than the median of this set of results for that file.

| Service | Median | F10 A41 | F11 A97 | F13 B52 | F14 C18 | F14 C42 | F15 C96 | F16 D64 | F17 D83 | F17 E03 | F18 E82 | F18 E83 | F18 E84 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 3Scribe 2020-05 $7.8/hr | 9 | 14 | 8 | 8 | 6 | 8 | 7 | 12 | 9 | 12 | 14 | 9 | 11 |
| Rev.ai 2020-04 $2.1/hr | 11 | 18 | 12 | 10 | 7 | 9 | 7 | 14 | 9 | 12 | 17 | 9 | 12 |
| AssemblyAI 2020-05 $0.9/hr | 11 | 20 | 10 | 11 | 9 | 10 | 10 | 14 | 10 | 13 | 16 | 11 | 12 |
| Google 2019-07 | 12 | 21 | 9 | 9 | 8 | 11 | 8 | 12 | 9 | 12 | 23 | 14 | 14 |
| Google 2020-02 $1.4/hr | 12 | 20 | 10 | 10 | 9 | 11 | 9 | 12 | 10 | 13 | 22 | 13 | 14 |
| Speechmatics 2018-12 | 12 | 19 | 11 | 10 | 9 | 11 | 9 | 16 | 11 | 13 | 19 | 14 | 12 |
| Rev.ai 2019-07 | 12 | 21 | 12 | 11 | 7 | 12 | 8 | 17 | 10 | 14 | 18 | 11 | 14 |
| Speechmatics 2020-04 $4.4/hr | 12 | 18 | 11 | 10 | 9 | 12 | 9 | 16 | 10 | 13 | 19 | 14 | 12 |
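The ordering and color coding described above can be approximated from the rounded per-file scores in the table. This Python sketch assumes “the median of this set of results for that file” means the median across all eight rows; it is not necessarily how the table was actually produced.

```python
from statistics import median

# The twelve test clips, in table column order.
FILES = ["F10 A41", "F11 A97", "F13 B52", "F14 C18", "F14 C42", "F15 C96",
         "F16 D64", "F17 D83", "F17 E03", "F18 E82", "F18 E83", "F18 E84"]

# Rounded per-file WER scores copied from the table (Median column omitted).
SCORES = {
    "3Scribe 2020-05":      [14, 8, 8, 6, 8, 7, 12, 9, 12, 14, 9, 11],
    "Rev.ai 2020-04":       [18, 12, 10, 7, 9, 7, 14, 9, 12, 17, 9, 12],
    "AssemblyAI 2020-05":   [20, 10, 11, 9, 10, 10, 14, 10, 13, 16, 11, 12],
    "Google 2019-07":       [21, 9, 9, 8, 11, 8, 12, 9, 12, 23, 14, 14],
    "Google 2020-02":       [20, 10, 10, 9, 11, 9, 12, 10, 13, 22, 13, 14],
    "Speechmatics 2018-12": [19, 11, 10, 9, 11, 9, 16, 11, 13, 19, 14, 12],
    "Rev.ai 2019-07":       [21, 12, 11, 7, 12, 8, 17, 10, 14, 18, 11, 14],
    "Speechmatics 2020-04": [18, 11, 10, 9, 12, 9, 16, 10, 13, 19, 14, 12],
}


def rank_by_median(scores):
    """Return (service, median WER) pairs, lowest (best) median first."""
    return sorted(((name, median(wers)) for name, wers in scores.items()),
                  key=lambda pair: pair[1])


def deviation_from_file_median(scores):
    """Each service's WER minus the median WER of all services, per file.

    Negative values are better than typical (deeper green in the table);
    positive values are worse (deeper red)."""
    names = list(scores)
    file_medians = [median(scores[name][i] for name in names)
                    for i in range(len(FILES))]
    return {name: [wer - m for wer, m in zip(scores[name], file_medians)]
            for name in names}


for name, med in rank_by_median(SCORES):
    print(f"{name:<22} median {med}")
```

Sorting by these medians reproduces the row order above. Several medians fall on a half (Google 2019-07 works out at 11.5, shown as 12), and Python's stable sort leaves tied rows in their original order.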

One of the few benefits of taking years to work through this process is that I can see how a service’s ASR results change over time. While Google’s and Speechmatics’ scores dropped a little, Rev.ai’s has improved significantly. 3Scribe and AssemblyAI are newcomers to my testing.

The prices shown are the approximate USD cost per hour, ignoring any free tier or bulk discounts.

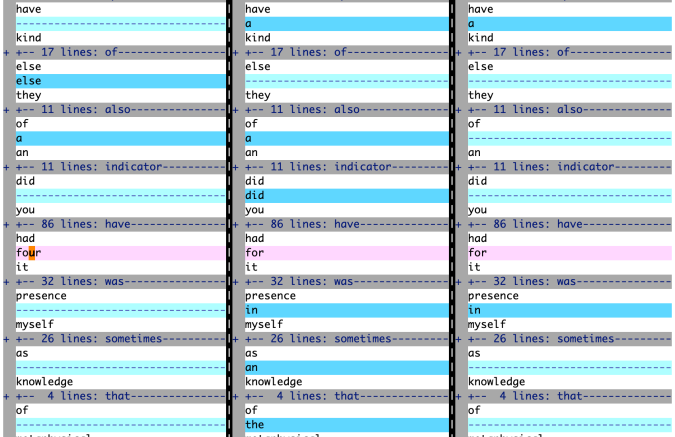

It’s important to note that these results are all very good. The nature of the informal testing I’m doing means there’s really little value in distinguishing between small differences in WER scores. At this level the scores are significantly affected by differences in how “verbatim” the systems try to be, such as when a speaker hesitates and repeats a word or two. For example, here’s a section of vimdiff showing Google, Rev.ai, and Speechmatics making different choices:

Compared with these stylistic choices, actual transcription errors now have a smaller effect on the WER score, and I don’t have the time to sift through which differences matter and which don’t. I’m content these services are all good enough for my needs.

Google

Google’s score has dropped insignificantly (-0.1) since July last year. The problem I found in my previous test, where the transcript of the F18.E82 clip was missing a chunk of text, is still present.

Speechmatics

When I submitted each audio file to Speechmatics a pop-up alert said “Duplicate file. You already have a job that used a file with this name. Are you sure you want to select it again?” I said yes. Speechmatics uploaded the file, took some time to transcribe it, and charged me for the service. When I downloaded the transcripts I found that they were identical to the ones generated in 2018. This seemed suspicious, so I edited an audio file to remove a tiny moment of silence and tried again. This time the transcript was different, so I did the same for all the other files. That sure seems like a bug.

Speechmatics’ score has dropped since December 2018. The drop (-0.3) is slightly larger than Google’s but still small.

Rev.ai

Rev.ai is a newcomer to my testing. Jay Lee, the General Manager of speech services at Rev.com, contacted me in July, prompted by my previous blog post. Rev.ai is the enterprise version of their ASR API. We had a call where we talked over the project, my methods, and the results. Full disclosure: Jay very kindly donated enough minutes of Rev.ai time to cover my needs for this project.

I tested their Rev.ai service in July and the results were good then. They’re even better now (+0.9).

3Scribe

As I was drafting this post Eddie Gahan from 3Scribe contacted me with an invitation to try out their new service. Their scores are impressive. They have an API but don’t yet offer features like word-level timings or confidence scores. They’re one to watch. I wish them well, not least because they’re an Irish company.

AssemblyAI

I’d overlooked AssemblyAI thus far. They only offer an API, though it’s simple to use and well documented. They don’t provide speaker diarisation, but they do provide excellent results at an excellent price, with punctuation and word-level timing and confidence. Their free tier is 300 minutes/month.

Differential Analysis

Each service has a relatively high WER score when using the transcript from one of the others as the ground truth. This is good. It means the services are making different mistakes/decisions and those differences could be used to highlight likely errors in the others.
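For reference, WER is the word-level edit distance (substitutions, deletions and insertions) divided by the number of words in the reference transcript. Below is a minimal Python sketch with a deliberately simplistic normalisation (lower-casing and dropping punctuation); real scoring scripts usually normalise more carefully. WER isn't symmetric, so a differential score depends on which transcript is treated as the ground truth.

```python
import itertools
import re


def tokens(text):
    """Lower-case words with punctuation dropped (a simplistic normalisation)."""
    return re.findall(r"[a-z0-9']+", text.lower())


def wer(reference, hypothesis):
    """Word error rate of hypothesis against reference, as a percentage.

    Word-level Levenshtein distance (substitutions + deletions + insertions)
    divided by the number of reference words."""
    ref, hyp = tokens(reference), tokens(hypothesis)
    prev = list(range(len(hyp) + 1))  # distances for an empty reference prefix
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(prev[j] + 1,             # deletion
                          curr[j - 1] + 1,         # insertion
                          prev[j - 1] + (r != h))  # substitution, or match
        prev = curr
    return 100.0 * prev[-1] / max(len(ref), 1)


def differential_wer(transcripts):
    """WER for every ordered pair of services, using the first as ground truth."""
    return {(a, b): wer(transcripts[a], transcripts[b])
            for a, b in itertools.permutations(transcripts, 2)}
```

Passing a dict of `{service name: transcript text}` to `differential_wer` gives every pairwise score; low values between two services suggest they make the same decisions.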

Diversions

A couple of issues diverted me for a while.

Exploring the Parameter Space of the Google API

Unlike the other services, the Google API offers many configuration options which provide “information to the recognizer that specifies how to process the request”. There’s an implication, to me at least, that providing more detailed configuration options could result in more accurate transcriptions. But which ones would have a significant effect?

I picked a number of parameters and ran transcriptions with various combinations of likely-looking parameter values. I was especially hopeful that specifying a NAICS code for the topic of the podcast would have a positive effect. To cut a long story short, nothing made a significant difference, except providing a vocabulary. I suspect Google may use the configuration details provided by the users to help train their system.
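A sweep like this can be generated mechanically. This Python sketch builds one request body per combination of a few configuration values, in the camelCase JSON form used by Google's `speech:longrunningrecognize` REST method. The chosen fields and values are illustrative assumptions (the combinations actually tried aren't listed), the NAICS code is a placeholder, and some fields, such as `metadata`, may only be accepted by particular API versions.

```python
import itertools

# Illustrative candidate values; None means "leave the field unset".
GRID = {
    "model": ["default", "video"],
    "useEnhanced": [False, True],
    "interactionType": ["DISCUSSION", "PROFESSIONALLY_PRODUCED"],
    "industryNaicsCodeOfAudio": [None, 519130],  # placeholder code
}

# Keys that belong inside config.metadata rather than config itself.
METADATA_KEYS = {"interactionType", "industryNaicsCodeOfAudio"}


def build_requests(audio_uri, grid=GRID):
    """Yield (combination, request body) for every combination of grid values."""
    keys = list(grid)
    for values in itertools.product(*(grid[key] for key in keys)):
        combo = dict(zip(keys, values))
        config = {"languageCode": "en-US", "enableAutomaticPunctuation": True}
        metadata = {}
        for key, value in combo.items():
            if value is None:
                continue
            target = metadata if key in METADATA_KEYS else config
            target[key] = value
        if metadata:
            config["metadata"] = metadata
        yield combo, {"config": config, "audio": {"uri": audio_uri}}
```

Each combination costs a full round of transcriptions, so the grid has to stay small: this one already yields 16 requests per audio file.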

Extra Vocabulary

Both the Google and Rev.ai APIs provide a way to improve transcription accuracy by specifying extra words and phrases.

Google’s SpeechContext has phrases: “A list of strings containing words and phrases ‘hints’ so that the speech recognition is more likely to recognize them. This can be used to improve the accuracy for specific words and phrases.” There’s also an integer boost value: “Positive value will increase the probability that a specific phrase will be recognized over other similar sounding phrases. The higher the boost, the higher the chance of false positive recognition as well. We recommend using a binary search approach to finding the optimal value for your use case.”
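Google's binary-search advice can be read as searching for the bottom of a WER curve. This Python sketch assumes WER is unimodal in boost (falling at first, then rising as false positives take over) and restricts the search to integers from 0 to 20. Both that range and `wer_for_boost`, a hypothetical callback that would transcribe the test files at a given boost and return their WER, are assumptions.

```python
from functools import lru_cache


def speech_context(phrases, boost):
    """One SpeechContext entry in the JSON form of Google's RecognitionConfig."""
    return {"phrases": list(phrases), "boost": boost}


def find_best_boost(wer_for_boost, low=0, high=20):
    """Binary-search integer boost values in [low, high] for the lowest WER.

    Assumes WER is unimodal in boost. Each probe costs a round of
    transcription, so results are cached."""
    score = lru_cache(maxsize=None)(wer_for_boost)
    while low < high:
        mid = (low + high) // 2
        if score(mid) <= score(mid + 1):
            high = mid       # the minimum is at mid or below it
        else:
            low = mid + 1    # the minimum is above mid
    return low
```

Comparing neighbouring boosts tells the search which side of the minimum it's on, so it needs only about two transcription runs per halving of the range, rather than one run for every candidate value.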

Rev.ai describe custom_vocabularies like this: “An array of words or phrases not found in the normal dictionary. Add your specific technical jargon, proper nouns and uncommon phrases as strings in this array to add them to the lexicon for this job.”
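For comparison, a Rev.ai job with a custom vocabulary could be submitted roughly like this. The Python sketch only builds the HTTP request with the standard library. The endpoint and the `media_url` and `custom_vocabularies` field layout are assumptions based on Rev.ai's v1 jobs API and should be checked against the current reference.

```python
import json
import urllib.request

# Assumed endpoint for submitting asynchronous Rev.ai jobs (v1).
REV_JOBS_URL = "https://api.rev.ai/speechtotext/v1/jobs"


def rev_job_request(media_url, phrases, access_token):
    """Build (but don't send) a POST request for a job with a custom vocabulary.

    Assumed shape: custom_vocabularies is a list of objects, each holding
    a "phrases" list of strings."""
    body = {
        "media_url": media_url,
        "custom_vocabularies": [{"phrases": list(phrases)}],
    }
    return urllib.request.Request(
        REV_JOBS_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the request to `urllib.request.urlopen` would submit the job; the access token must be a real Rev.ai token.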

Specifying a vocabulary of extra words seemed like a very appealing way to improve the transcription accuracy. Certainly worth spending some time exploring. I needed a list of words that the transcriptions tended to get wrong. For each file I compared the ground truth transcript with all the ASR generated transcripts and extracted a list of all the words the ASRs had got wrong, regardless of circumstances. I called this a ‘commonly wrong words’ list.
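The extraction step isn't spelled out, so here is one way it might be done: a Python sketch that uses `difflib.SequenceMatcher` to align each ASR transcript with the ground truth. Any ground-truth word that falls in a replaced or deleted region counts as “wrong”, regardless of context. Insertions are ignored because they have no ground-truth word.

```python
import difflib
import re


def words(text):
    """Lower-case words with punctuation dropped."""
    return re.findall(r"[a-z0-9']+", text.lower())


def commonly_wrong_words(ground_truth, asr_transcripts):
    """Ground-truth words that at least one ASR transcript substituted or dropped."""
    ref = words(ground_truth)
    wrong = set()
    for transcript in asr_transcripts:
        matcher = difflib.SequenceMatcher(None, ref, words(transcript),
                                          autojunk=False)
        for tag, i1, i2, _j1, _j2 in matcher.get_opcodes():
            if tag in ("replace", "delete"):
                wrong.update(ref[i1:i2])
    return sorted(wrong)
```

`autojunk=False` stops difflib from treating very frequent words as junk, which it otherwise does for sequences of 200 or more items, as any full transcript would be.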

I tried Google first, submitting jobs with the commonly wrong words and various boost levels. A boost level of 3 had the best effect, reducing the WER from around 11.5 to 9.5. An impressive gain!

Then I tried the same with Rev.ai. This time the results got worse. I was rather puzzled and disappointed. I contacted Rev and they kindly arranged a meeting to explain how the feature worked. My take-aways were that it’s useful for specific terms, and especially phrases, that aren’t already known to their system; that my “shotgun” approach of adding lots of individual words wasn’t a good use of it; and that its effect is hard to predict.

Later on it dawned on me that my ‘commonly wrong words’ approach was simply not valid. I was effectively cheating by strongly hinting to Google what words it had got wrong. Moreover it would not be possible to automatically generate a suitable list of words and phrases for each audio file to be transcribed. The closest viable approach might be to extract unusual words and phrases, such as uncommon names, from podcast show notes. I may return to exploring the creation and use of a custom vocabulary later, but for now I’m shelving it.
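The show-notes idea might start with a heuristic as simple as this: pick out capitalised words and runs of capitalised words, then keep those containing at least one word that isn't in a list of everyday words. It's a hypothetical Python sketch; where `common_words` comes from (a word-frequency list, say) is left open.

```python
import re

# One or more capitalised words separated by spaces, e.g. "Jane Doe".
CAPITALISED_RUN = re.compile(r"\b[A-Z][\w'-]*(?: +[A-Z][\w'-]*)*")


def candidate_phrases(show_notes, common_words):
    """Capitalised words and phrases from show notes that contain at least
    one word not in common_words (a set of lower-case everyday words)."""
    phrases = set()
    for match in CAPITALISED_RUN.finditer(show_notes):
        phrase = match.group()
        if any(word.lower() not in common_words for word in phrase.split()):
            phrases.add(phrase)
    return sorted(phrases)
```

For example, given the notes “Today we talk to Jane Doe about Kubernetes and the Moon.” and a common-word set containing “today” and “moon”, it returns `['Jane Doe', 'Kubernetes']`.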

Other ASR Services Using Rev?

When I talked to Rev.ai, they mentioned Fireflies.ai, a service which simplifies recording and transcription of business meetings: you invite their bot, called Fred, to join the meeting via your calendar app and the rest is automatic. Fireflies use Rev.ai as the ASR. I tried them out and was puzzled to see that some of their results were better than Rev.ai’s.

That reminded me of Descript who, when I tested them previously, were using Google as the ASR yet had some results that were better than Google’s.

It seems there are two factors at play: pre-processing of the audio before it’s sent to the ASR, and post-processing of the raw ASR results.

Here’s a comparison of results from Fireflies.ai, Rev.ai, and Descript:

| Service | Median | F10 A41 | F11 A97 | F13 B52 | F14 C18 | F14 C42 | F15 C96 | F16 D64 | F17 D83 | F17 E03 | F18 E82 | F18 E83 | F18 E84 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Fireflies 2020-04 | 10 | 18 | 10 | 9 | 8 | 10 | 7 | 15 | 9 | 13 | 15 | 9 | 13 |
| Rev.ai 2020-04 | 11 | 18 | 12 | 10 | 7 | 9 | 7 | 14 | 9 | 12 | 17 | 9 | 12 |
| Descript 2020-04 | 12 | 20 | 12 | 11 | 7 | 11 | 7 | 16 | 9 | 12 | 17 | 9 | 13 |

I’ve included Descript because the differential WER scores between the three are low. Specifically, the WER between Descript and Rev.ai is half that between Descript and Google, suggesting that Descript is using Rev in their ASR process.

It’s interesting that Fireflies.ai did especially well on the three oldest files, with relatively lower quality audio, and the more recent F18.E82 file that had clipping. It made me wonder if I should experiment with some audio pre-processing of my own but, after spending 4 years getting this far, I’ll pass!

Revisiting The Past

I thought it would be interesting to revisit the 2-hour audio file I used in the first of my ASR comparison posts, back in May 2018.

The figures in the Sentences, Commas, and Questions columns are the number of full-stop, comma, and question-mark characters in the transcript. The figures in the Names column are a rough approximation of the number of proper nouns.

| Service | WER | Sentences | Commas | Questions | Names |
|---|---|---|---|---|---|
| Human range low – high | 4.10 – 5.10 | 840 – 1261 | 1450 – 1748 | 49 – 76 | 1056 – 1208 |
| Rev.ai 2020-05 | 8.48 | 731 | 1383 | 59 | 884 |
| 3Scribe 2020-05 | 8.71 | 688 | 664 | 67 | 995 |
| AssemblyAI 2020-05 | 9.34 | 805 | 643 | 69 | 996 |
| Speechmatics 2020-05 | 9.61 | 667 | 0 | 0 | 931 |
| Google 2018-04 | 10.03 | 641 | 421 | 29 | 1232 |
| Google 2020-05 | 10.36 | 462 | 238 | 20 | 1325 |
| Speechmatics 2018-02 | 11.65 | 672 | 0 | 0 | 892 |
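The Sentences, Commas and Questions counts are plain character counts, so they're easy to reproduce. How the Names approximation was made isn't stated; this Python sketch assumes one plausible heuristic: counting capitalised words that don't start a sentence and aren't the pronoun “I”.

```python
import re

# The pronoun "I" and its contractions ("I'm", "I've", ...), with either apostrophe.
PRONOUN_I = re.compile(r"I(['’]\w+)?")


def transcript_stats(transcript):
    """Counts comparable to the table columns.

    Sentences, Commas and Questions count '.', ',' and '?' characters.
    Names is an assumed heuristic: capitalised words that don't start a
    sentence, excluding the pronoun "I"."""
    names = 0
    for sentence in re.split(r"(?<=[.?!])\s+", transcript.strip()):
        for word in sentence.split()[1:]:  # skip the sentence-initial word
            word = word.strip(".,?!;:\"“”()")
            if word[:1].isupper() and not PRONOUN_I.fullmatch(word):
                names += 1
    return {
        "sentences": transcript.count("."),
        "commas": transcript.count(","),
        "questions": transcript.count("?"),
        "names": names,
    }
```

Because the sentence count is a count of full-stop characters, abbreviations such as “Mr.” and decimal points inflate it slightly, matching the definition above rather than a linguistic sentence count.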

The Rev.ai results are particularly impressive. Beyond the good WER score, they also come remarkably close to the human transcripts in the number of sentences, commas and questions they identify. 3Scribe and AssemblyAI come similarly close for sentences and questions, though they produce fewer than half as many commas as the human transcripts. Accurate recognition of sentences, and especially questions, should be helpful in segmenting the transcript into topics.

Over the last two years Google’s results have got slightly worse and Speechmatics have improved enough to jump ahead of them. (The 2018 figures for Google and Speechmatics don’t exactly match those in my earlier post due to small changes in my analysis scripts, such as recognizing more compound words.)

Conclusions

For my needs, on this project, Rev.ai have the best results. Their kind donation of time credits is the icing on the cake. AssemblyAI lacks speaker identification. Speechmatics has a better WER score than Google but doesn’t produce commas or question marks. All except 3Scribe have word-level timing and confidence indicators.

It’s time for me to start doing some bulk processing. Finally.

I’ll start with a few recent 2-hour podcast audio files, transcribe them via the Rev.ai API, then convert the raw JSON returned by the API into various formats, including Markdown and basic HTML. Once I’ve got a pipeline set up I’ll start working backwards through older episodes. Then I’ll iterate on whatever extra features seem most interesting at the time, probably starting with search, and probably using Elasticsearch.
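As a starting point for that conversion, here's a Python sketch that turns a Rev.ai JSON transcript into Markdown, one paragraph per speaker turn with a start timestamp. It assumes the transcript shape Rev.ai documents: a list of `monologues`, each holding a `speaker` and a list of `elements` (words of type `text` with a `ts` start time, plus `punct` elements carrying spaces and punctuation). The output layout is an arbitrary choice.

```python
import json


def hms(seconds):
    """Format seconds as H:MM:SS."""
    total = int(seconds)
    return f"{total // 3600}:{total % 3600 // 60:02d}:{total % 60:02d}"


def rev_json_to_markdown(raw_json):
    """Render a Rev.ai transcript as Markdown, one paragraph per monologue."""
    transcript = json.loads(raw_json)
    paragraphs = []
    for monologue in transcript.get("monologues", []):
        elements = monologue.get("elements", [])
        # Punctuation elements supply the spaces, so plain concatenation works.
        text = "".join(element.get("value", "") for element in elements).strip()
        if not text:
            continue
        # Start time of the first timed element (punctuation has no "ts").
        start = next((e["ts"] for e in elements if "ts" in e), 0.0)
        speaker = monologue.get("speaker", "?")
        paragraphs.append(f"**Speaker {speaker}** [{hms(start)}]: {text}")
    return "\n\n".join(paragraphs)
```

An HTML version could follow the same loop, passing the text through `html.escape` before wrapping it in tags.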

Remember, these are just my results for this project and with these specific audio files and subject matter. Your mileage will vary. Work out what features you need and do your own testing with your own audio to work out which services will work best for you. Have fun.

Updates:

- 22nd May 2020: Added results for AssemblyAI.

- 23rd May 2020: Added the approximate cost per hour for the services.
