In my two previous posts I evaluated a number of Automatic Speech Recognition systems and selected Google and Speechmatics as the best fit for my needs. Here, after another long gap, I’m returning with updated results and discussion, including excellent new results from Rev.ai, 3Scribe and AssemblyAI.

A Comparison of Automatic Speech Recognition (ASR) Systems, part 2

In my previous post I evaluated a number of Automatic Speech Recognition systems. That evaluation was useful but limited in an important way: it used only a single good-quality audio file with a single pair of speakers (both of whom happened to be male with clear North American accents). Consequently it said nothing about performance across different accents or varying audio quality.

To address that limitation I’ve tested 14 ASR systems with 12 different audio files, covering a range of accents and audio quality. This post presents the results.

A Comparison of Automatic Speech Recognition (ASR) Systems

Back in March 2016 I wrote Semi-automated podcast transcription about my interest in finding ways to make archives of podcast content more accessible. Please read that post for details of my motivations and goals.

Some 11 months later, in February 2017, I wrote Comparing Transcriptions describing how I was exploring measuring transcription accuracy. That turned out to be more tricky, and interesting, than I’d expected. Please read that post for details of the methods I’m using and what the WER (word error rate) score means.
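For readers who want a feel for the metric before following the link: WER is conventionally defined as the word-level edit distance (substitutions, deletions and insertions) between a reference transcript and a hypothesis, divided by the number of words in the reference. The sketch below illustrates that standard definition only. It assumes naive lowercasing and whitespace tokenization, the function name is made up for illustration, and it is not necessarily how the scores in these posts were computed.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the reference word count.

    Assumes naive normalization: lowercase and split on whitespace.
    """
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] is the edit distance between the reference words seen so far
    # (excluding the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]  # deleting all i reference words matches an empty hypothesis
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr.append(min(substitution, deletion, insertion))
        prev = curr

    return prev[-1] / len(ref)


if __name__ == "__main__":
    ref = "the cat sat on the mat"
    hyp = "the cat sat on a mat"
    print(f"WER: {word_error_rate(ref, hyp):.3f}")
```

Because insertions count as errors, WER can exceed 1.0 when the hypothesis is much longer than the reference. Real comparisons also depend heavily on how punctuation, numbers and contractions are normalized before scoring.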

Here, after another over-long gap, I’m returning to post the current results, and start thinking about next steps. One cause of the delay has been that whenever I returned to the topic there had been significant changes in at least one of the results, most recently when Google announced their enhanced models. In the end the delay turned out to be helpful.

Comparing Transcriptions

After a pause I am working again on my semi-automated podcast transcription project. The first part involves evaluating the quality of various methods of transcription. But how?

In this post I’ll explore how I’ve been comparing transcripts to evaluate transcription services. I’ll include the results for some human-powered services. I’ll write up the results for automated services in a later post.

Semi-automated podcast transcription

The medium of podcasting continues to grow in popularity. Americans, for example, now listen to over 21 million hours of podcasts per day. Few of those podcasts have transcripts available, so the content isn’t discoverable, searchable, linkable, reusable. It’s lost.

The typical solution is to pay a commercial transcription service. These services charge roughly $1/minute and claim around 98% accuracy. For a podcast producing an hour of content a week, that would add an overhead of around $250 a month. A back catalogue of a year of podcasts would cost over $3,100 to transcribe.
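As a rough check on those figures, here is a minimal sketch. It assumes a flat $1/minute rate, exactly 60 minutes of audio per week, and 52 weeks per year. The constant and function names are purely illustrative.

```python
PRICE_PER_MINUTE = 1.00   # assumed flat rate in dollars
MINUTES_PER_WEEK = 60     # one hour of content per week
WEEKS_PER_YEAR = 52


def yearly_cost(price_per_minute: float = PRICE_PER_MINUTE,
                minutes_per_week: int = MINUTES_PER_WEEK) -> float:
    """Cost of transcribing a full year of weekly episodes."""
    return price_per_minute * minutes_per_week * WEEKS_PER_YEAR


def monthly_cost(price_per_minute: float = PRICE_PER_MINUTE,
                 minutes_per_week: int = MINUTES_PER_WEEK) -> float:
    """Average monthly cost, spreading the yearly total over 12 months."""
    return yearly_cost(price_per_minute, minutes_per_week) / 12


if __name__ == "__main__":
    print(f"per month: ${monthly_cost():,.2f}")
    print(f"per year:  ${yearly_cost():,.2f}")
```

Under these assumptions the yearly total is $3,120 and the monthly average is $260, in line with the rounded estimates above.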

When I remember fragments of some story or idea that I recall hearing on a podcast, I’d like to be able to find it again. Without searchable transcripts I can’t. It’s impractical to listen to hundreds of old episodes, so the content is effectively lost.

Given the advances in automated speech recognition in recent years, I began to wonder if some kind of automated transcription system would be practical. This led on to some thinking about interesting user interfaces.

This (long) post is a record of my research and ponderings around this topic. I sketch out some goals, constraints, and a rough outline of what I’m thinking of, along with links to many tools, projects, and references to information that might help. I’ve also been updating it as I’ve come across extra information and new services.

I’m hoping someone will tell me that such a system, or parts of it, already exist so that I can contribute to those existing projects. If not then I’m interested in starting a new project – or projects – and would welcome any help. Read on if you’re interested…

Introducing Data::Tumbler and Test::WriteVariants

For some time now Jens Rehsack (Sno), H.Merijn Brand (Tux) and I have been working on bootstrapping a large project to provide a common test suite for the DBI that can be reused by drivers to test their conformance to the DBI specification.

This post isn’t about that. This post is about two spin-off modules that might seem unrelated: Data::Tumbler and Test::WriteVariants, and the Perl QA Hackathon that saw them released.

Migrating a complex search query from DBIx::Class to Elasticsearch

At the heart of one of our major web applications at TigerLead is a property listing search. The search supports all the obvious criteria, like price range and bedrooms, more complex ones like school districts, plus a “full-text” search field.

This is the story of moving the property listing search logic from querying a PostgreSQL instance to querying an Elasticsearch cluster.

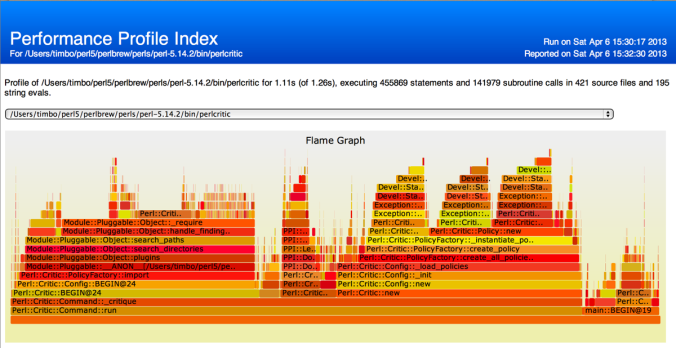

NYTProf v5 – Flaming Precision

As soon as I saw a Flame Graph visualization I knew it would make a great addition to NYTProf. So I’m delighted that the new Devel::NYTProf version 5.00, just released, has a Flame Graph as the main feature of the index page.

In this post I’ll explain the Flame Graph visualization, the new ‘subroutine calls event stream’ that makes the Flame Graph possible, and other recent changes, including improved precision in the subroutine profiler.

Suggested Alternatives as a MetaCPAN feature

I expressed this idea recently in a tweet and then started writing it up in more detail as a comment to Brendan Byrd’s The Four Major Problems with CPAN blog post. It grew in detail until I figured I should just write it up as a blog post of my own.

Introducing Devel::SizeMe – Visualizing Perl Memory Use

For a long time I’ve wanted to create a module that would shed light on how perl uses memory. This year I decided to do something about it.

My research and development didn’t yield much fruit in time for OSCON in July, where my talk ended up being about my research and plans. (I also tried to explain that RSS isn’t a useful measurement for this, and that malloc buffering means even total process size isn’t a very useful measurement.) I was invited to speak at YAPC::Asia in Tokyo in September and really wanted to have something worthwhile to demonstrate there.

I’m delighted to say that some frantic hacking (aka Conference Driven Development) yielded a working demo just in time and, after a little more polish, I’ve now uploaded Devel::SizeMe to CPAN.

In this post I want to introduce you to Devel::SizeMe, show some screenshots, a screencast of the talk and demo, and outline current issues and plans for future development.