This is the back story to the development of NYTProf v2.

Earlier this year (2008) I needed to do some performance profiling of the source code of a large application. Like many perl developers, my first instinct was to try using Devel::DProf (actually the Apache::DProf wrapper as it was a mod_perl application). It was not a great experience.

DProf

DProf seems easily confused by unusual control flow, spewing “… has unstacked calls” warnings. Also, the subroutines it said were taking the most time didn’t make sense to me. Eventually I worked out that Devel::DProf is effectively broken.

The application I was trying to profile has quite a few large subroutines, so knowing just the time spent in the subroutine as a whole didn’t help much for those. I wanted to know where in the subroutine the time was being spent.

FastProf

That led me to look at line-based profilers. At the time there was only one worth looking at, Devel::FastProf by Salvador Fandiño García (which was based on Devel::SmallProf by Ted Ashton).

Line profilers spit out a stream of “file id, line number, time spent” records to a file. The time between starting one statement and starting the next is measured and associated with the line number of the statement.
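To make that record stream concrete, here is a minimal sketch of how a report might total the records per line. The record layout and the numbers are made up for illustration; this is not the actual FastProf file format.

```perl
use strict;
use warnings;

# Hypothetical records in the order a line profiler might emit them:
# [ file id, line number, seconds spent ]. The values are invented.
my @records = (
    [ 1, 10, 0.002 ],
    [ 1, 11, 0.010 ],
    [ 1, 10, 0.003 ],
    [ 2,  5, 0.001 ],
);

# Sum the time and count the executions for each (file id, line) pair.
sub aggregate_records {
    my @recs = @_;
    my %stats;
    for my $rec (@recs) {
        my ($fid, $line, $secs) = @$rec;
        my $entry = $stats{"$fid:$line"} ||= { time => 0, calls => 0 };
        $entry->{time}  += $secs;
        $entry->{calls} += 1;
    }
    return \%stats;
}

# Print the lines, most expensive first.
my $stats = aggregate_records(@records);
for my $key (sort { $stats->{$b}{time} <=> $stats->{$a}{time} } keys %$stats) {
    printf "%-6s %d calls %.3fs\n", $key, $stats->{$key}{calls}, $stats->{$key}{time};
}
```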

Devel::FastProf was great. Fast and effective. It gave me far more accurate timings, and when I made changes in the code it highlighted I could see an immediate effect on performance.

Devel::FastProf was great, but I wanted more. The lack of subroutine level timing was frustrating. When you have ~100,000 lines of code, knowing the time spent on each line, and how many times it was executed, is less useful than you may think – there’s just too much detail. That’s especially true when looking for structural problems in the code, or for good places to add caching, or to pass extra information down a call chain to save expensive calls deeper in the code. There’s a need for both subroutine and line level timings when profiling.

Idea

I’d had an idea: The line number output in the FastProf profile need not be the line number of the statement. It could output the line number of the subroutine containing the statement. Then you’d automatically get subroutine level timings! Simple.
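The idea can be illustrated at the Perl level with a rough sketch. It assumes the start and end lines of each sub are already known (the `%sub_range` table and its contents are hypothetical); the real work had to be done inside the profiler, using perl's internals.

```perl
use strict;
use warnings;

# Hypothetical sub ranges for one file: name => [ first line, last line ].
my %sub_range = (
    parse  => [ 10, 40 ],
    render => [ 50, 90 ],
);

# Return the first line of the sub containing $line, or $line itself
# when the statement is not inside any known sub.
sub enclosing_sub_line {
    my ($line) = @_;
    for my $range (values %sub_range) {
        my ($first, $last) = @$range;
        return $first if $line >= $first && $line <= $last;
    }
    return $line;
}

# Per-statement times keyed by line number (made-up values).
my %line_time = ( 12 => 0.5, 30 => 0.25, 60 => 1 );

# Recording each statement's time against its sub's first line
# yields subroutine level totals with no extra bookkeeping.
my %sub_time;
$sub_time{ enclosing_sub_line($_) } += $line_time{$_} for keys %line_time;
```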

Then I wondered if it was possible to find the line number of the block the statement was in. That would give block level timing! A first for any perl profiler.

My perl internals knowledge was getting rusty as it was a few years since I’d been Pumpkin for the 5.4.x release, so I asked the wizards on perl5-porters. They gave me hope and enough clues to get going.

Salvador kindly moved the Devel::FastProf code to a public svn, so I could contribute more easily, and I started hacking. I added code to find the nearest enclosing block or sub and it proved very useful.

When I’m optimizing I start by identifying “locally inefficient code”. That is, code you can optimize without significant structural changes. Without making changes outside the subroutine. Moving code outside loops is a common example. Subroutine timings identify the hot subs, then block and line timings pinpoint the hot spots in the code.

That’s the low hanging fruit. Easy pickings, and often very effective. But there’s a limit to how far that’ll take you.

Callers and Callees

There are two ways to optimize a hot piece of code: make it faster, or execute it less often. The former tends to get much more attention than the latter. It’s important to remember to keep stepping back. To keep looking for the bigger picture.

When I’m optimizing I often use a well defined chunk of work, like 10 requests to the same URL, so I can see if the number of times a subroutine is called “feels right”. That often shows subs being called “too often”. But what then? You need to know why the sub is being called too often, so you need to know where it’s being called from.

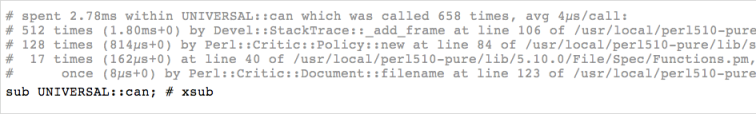

In Devel::FastProf I added counting of subroutine callers two levels up the call stack. So I could see that foo() was called 10 times by bar() and that of those 10 calls from bar() 7 had come from baz() and 3 from boo(). It was very simplistic, slow (implemented in perl) and only had counts, not timings, but proved very useful.
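A minimal sketch of that kind of two-level caller counting, using perl's built-in caller(). The `count_callers` and `short_name` helpers are hypothetical and exist only for this illustration; this is not the code that went into FastProf.

```perl
use strict;
use warnings;

my %call_count;    # "sub <- caller <- caller's caller" => number of calls

# Strip the package prefix; an undefined name means top-level code.
sub short_name {
    my ($name) = @_;
    return 'main program' unless defined $name;
    $name =~ s/.*:://;
    return $name;
}

# Hypothetical helper: call it at the top of a sub you want to track.
# Frame 1 names the tracked sub; frames 2 and 3 name the subs one and
# two levels further up the call stack.
sub count_callers {
    my $tracked = short_name( (caller(1))[3] );
    my $parent  = short_name( (caller(2))[3] );
    my $grand   = short_name( (caller(3))[3] );
    $call_count{"$tracked <- $parent <- $grand"}++;
    return;
}

sub foo { count_callers(); return }
sub bar { foo(); return }
sub baz { bar() for 1 .. 7; return }
sub boo { bar() for 1 .. 3; return }

baz();
boo();

print "$_: $call_count{$_}\n" for sort keys %call_count;
```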

Using these additions to Devel::FastProf I reduced the CPU usage of the application by over 40%. Not bad. (I could see another 10% or so to be gained fairly easily but had to draw the line somewhere.)

NYTProf

Meanwhile Adam Kaplan had forked Devel::FastProf, added a test harness and tests, made some internal improvements, grafted on an html report derived from Devel::Cover, and released it as Devel::NYTProf.

When I saw NYTProf I switched to working on that. Again, Adam was kind enough to move the code to a public svn repository. I was attracted not so much by the html report as by the test harness. A lesson to anyone wanting to attract developers to an open source project!

Testing profilers is hard and Adam had come up with a good basic testing framework which was easy to extend. NYTProf now has a strong test suite that profiles 19 different perl scripts with four different combinations of profiler options. The test suite has proven invaluable in identifying regressions during development, and for identifying portability issues between perl versions.

I re-implemented the block/sub level profiling and the subroutine caller tracking from FastProf in NYTProf, but with more care, more attention to performance, and now tests.

I was particularly pleased with the subroutine caller tracking. It intercepts the entersub opcode and uses the save stack for storage and to trigger a ‘destructor’ call to end the timing when the subroutine is exited by any means. The end result is an extremely fast and robust subroutine call profiler. I plan to add an option to disable the other profiler so you can just get subroutine profile details when you don’t need statement level details. It currently lacks the ability to give exclusive times but I think I’ve an efficient solution for that. (Update: Implemented in r340 and r343 so will be in the 2.02 release.)
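NYTProf does this in C using perl's save stack, which can't be shown in a few lines. As a loose Perl-level analogy only, the same “end the timing however the sub exits” effect can be sketched with a guard object whose DESTROY method runs when the sub's scope is left. The `TimingGuard` class and `risky` sub are hypothetical names for this illustration.

```perl
use strict;
use warnings;
use Time::HiRes ();

my %sub_time;     # sub name => total inclusive seconds
my %sub_calls;    # sub name => number of completed calls

package TimingGuard {
    # Start timing when the guard is created.
    sub new {
        my ($class, $name) = @_;
        return bless { name => $name, start => Time::HiRes::time() }, $class;
    }

    # Runs when the guard goes out of scope, whether the enclosing
    # sub returned normally or was exited by an exception.
    sub DESTROY {
        my ($self) = @_;
        $sub_time{ $self->{name} } += Time::HiRes::time() - $self->{start};
        $sub_calls{ $self->{name} }++;
        return;
    }
}

sub risky {
    my ($fail) = @_;
    my $guard = TimingGuard->new('risky');
    die "oops\n" if $fail;
    return 'ok';
}

risky(0);
eval { risky(1) };    # the guard still fires while the die unwinds

printf "risky: %d calls, %.6fs\n", $sub_calls{risky}, $sub_time{risky};
```

The times recorded this way are inclusive: they include time spent in any subs that the timed sub calls.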

Accuracy

Another key innovation was to fix a fundamental problem inherent in all statement profilers. Consider a statement that calls a subroutine and then performs some other work that doesn’t execute new statements, for example:

    foo(...) || mkdir(...);

In all other statement profilers the time spent in the remainder of the expression (mkdir in the example) will be recorded as having been spent on the last statement executed in foo()! Here’s another example:

    while (<>) {
        ...
        1;
    }

After the first time around the loop, any further time spent evaluating the condition (waiting for input in this example) would be recorded as having been spent on the last statement executed in the loop!

I fixed this in NYTProf by intercepting all the opcodes which indicate that control is returning into some previous statement and adjusting the profile accordingly.

Reporting

As much effort, if not more, went into the reporting side of the code. And there’s a lot more to be done there. My goal is to keep growing the data model classes to the point where any reporting code can get the information it needs easily enough that there’s no longer a need for the rather limiting Reporter class.

I’d like to see a single ‘nytprof’ command line tool that loads a class to generate the report. That would replace nytprofhtml and nytprofcsv. That would make it easy for other developers to release ‘nytprof reporting modules’ to CPAN.

For example, one very useful report that FastProf has but NYTProf currently lacks is a list of most expensive lines (or blocks, or subs) output in the format used by compiler error messages. The format is important because most editors have a special mode for reading such files that means you can hop from one ‘most expensive line’ to the next with a single key stroke. (For vim that’s called quickfix mode.) That’s a wonderful way to browse the hotspots and make edits on the spot.
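As a rough sketch of what such a report could look like, the following turns per-line totals into “file:line: message” lines, the general shape of compiler error output. The data, the file names and the `hotspot_lines` helper are all hypothetical; this is not an existing NYTProf or FastProf interface.

```perl
use strict;
use warnings;

# Made-up per-line totals: [ file name, line number, seconds, calls ].
my @line_stats = (
    [ 'lib/App.pm',    120, 1.25, 10  ],
    [ 'lib/App/DB.pm', 42,  3.5,  200 ],
    [ 'lib/App.pm',    77,  0.5,  1   ],
);

# Return the $limit most expensive lines as "file:line: message" strings.
sub hotspot_lines {
    my ($limit, @stats) = @_;
    my @sorted = sort { $b->[2] <=> $a->[2] } @stats;
    splice @sorted, $limit if @sorted > $limit;
    return map { sprintf '%s:%d: %.3fs in %d calls', @$_ } @sorted;
}

print "$_\n" for hotspot_lines(2, @line_stats);
```

Saved to a file, say hotspots.txt, output like this can be opened with `vim -q hotspots.txt`, after which `:cnext` steps from one hotspot to the next.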

Future

There are many, many, ways NYTProf can be enhanced further. As I’ve worked on it I’ve dumped ideas, issues and random notes into the HACKING file.

Thanks

I’d like to end by expressing my thanks to Salvador Fandiño García and especially Adam Kaplan for allowing me to contribute to the modules they created and tolerating my strong ideas with understanding. Thank you both. It’s been quite a ride.
