My first Open Source spin-off from TigerLead.com: UltraDNS a Perl client API for UltraDNS.

Author: TimBunce

Can you reproduce this NYTProf failure?

I’ve a new release of NYTProf ready to upload but I’m stuck.

The CPAN Testers service is reporting a failure on a number of systems but I can’t reproduce it locally or work out the cause.

Can you reproduce the failure with Devel::NYTProf 2.07_94? If so, could you give me remote access via ssh? (Or spare some time to investigate yourself – I’ll happily clue you in if you can reproduce the problem.)

Update: No one could reproduce it. It seems that the failures was not what it appeared to be. A clue was that only one tester was affected. Devel-NYTProf-2.07_94.tar.gz unpacked itself into a directory called Devel-NYTProf-2.07. It seems that when using CPANPLUS if the user had already got an old Devel-NYTProf-2.07 directory its contents got merged and tests would fail. I’m not convinced that’s the whole story, but Devel-NYTProf-2.07_95.tar.gz unpacked into a Devel-NYTProf-2.07_95 directory and didn’t run into the problem.

Update: More usefully, Andreas made my wish come true by pointing out the –solve parameter to the ctgetreports utility in his CPAN::Testers::ParseReports distribution. It “tries to identify the best contenders for a blame using Statistics::Regression. […] The function prints the […] top 3 candidates according to R^2 with their regression analysis.” Cool.

Crouching Tiger

After almost exactly two years working for Shopzilla I’ve moved on.

I’ve enjoyed my time with Shopzilla, met some great people, and worked on some challenging problems. I’m especially grateful to them for giving me the opportunity to develop and release some very useful Open Source software: Gofer, DashProfiler, and especially NYTProf v2.

When I started with ValueClick back in 1999 it was a small company. Over the years I was with them it grew rapidly, both from within and via mergers. When I left in 2006 it had become a very large company. For me Shopzilla was always a very large company.

I really like to make a significant positive impact where I work. I believe I did that at ValueClick and Shopzilla, but it’s always easier at a smaller company. There’s far less inertia to overcome.

So I’m delighted to be working now at a small company with a great team, product, technology, approach, and growth: TigerLead.com

I’m not keen on job titles, but I needed to put something on my LinkedIn profile. After exploring some options I settled on “Entropy Minimizer”!

I’m also delighted to be working with the PostgreSQL database, at last, as I’ve not had a chance to work with it before. (Although I used to be good at tuning Ingres, the distant forerunner of PostgreSQL, back in the days when RDBMS were novel and we thought a 50MHz cpu was fast.)

Dopplr vs Tripit

When arranging trips I used to manually enter the main details (dates, times, confirmation numbers, hotel addresses and phone numbers etc.) into iCal and forward confirmation emails to my wife so she’d have a copy if she needed them.

Then I discovered Tripit. Now I simply forward confirmation emails as they arrive to plan@tripit.com – something I could easily automate. My iCal automatically sync’s with a feed from tripit that has much more information than I’d ever enter manually, including links back to tripit.com for the full details. That then syncs to my iPhone so the information is always at hand. Perfect, and perfectly simple.

As I’ve found the service very useful over many months and many trips, I recently sent tripit invites to a bunch of people, mainly Perl folk. Many joined. A few pointed out that they already used dopplr and wondered if tripit was the same. I didn’t know, so I took a look.

I signed up with dopplr and was happy to see that, like tripit, I could forward confirmation emails to dopplr. So I forwarded the flight and hotel emails for my upcoming London Perl Workshop trip. Just like tripit, dopplr parsed the emails and worked out I was making a flight from Shannon to London and staying in a hotel in London.

That was all it did, though. It hadn’t noted my confirmation codes, flight times, hotel address. It had remembered nothing that would be useful to me.

All it had done was noted that I’d be in the same city as a few other people I know who use dopplr. tripit does that, but also let’s me control who sees which trips and who can collaborate with me on trips.

I did a quick search and found another review that reached a similar conclusion. It seems that dopplr are better at publicity, but tripit has the features I find genuinely useful.

To give it tripit a whirl, all you need to do is forward some recent confirmation emails to plan@tripit.com – you don’t even need to signup first.

NYTProf 2.07 – Even better, and now for Windows too

NYTProf development rolls on, with 2.07 being another significant release.

Here’s a summary of highlights from the many improvements.

Windows, at last

Windows wizard Jan Dubois has contributed patches that enable NYTProf to run on Windows. Many thanks Jan!

Corrected Subroutine Total Time

A significant error in time reported as spent in a subroutine has been fixed. It was showing the sum of the inclusive and exclusive time instead of just the inclusive time.

String Evals

One of NYTProf’s innovative features is tracking of subroutine calls by calling location. In earlier versions you couldn’t see calls made from within string evals. Now you can. Even for evals within evals:

That’s a big step forward for profiling code that makes heavy use of evals.

(The use of the µ micro symbol is also new – part of some helpful interface polishing contributed by Gisle Aas. They’re not used on the statement timings yet, but will be at some point.)

Recursion

Previously the ‘inclusive time’ reported for a subroutine wasn’t useful for subroutines called recursively. The overall inclusive time included the inclusive time of nested calls, typically inflating it beyond usefulness.

Now the inclusive time only measures the inclusive time of the outermost calls. NYTProf now also tracks and reports the maximum call depth for each calling location.

Disabling the Statement Profiler

NYTProf started life as a statement profiler. I added a subroutine profiler later and was delighted with how well that worked out. The subroutine profiler is novel in a few ways and also extremely robust and efficient. Part of the efficiency comes from not streaming data to disk while the program is running. It accumulates the counts and timings in memory.

When profiling large and/or performance sensitive applications the costs of NYTProf’s statement profiler, in performance impact and disk space, can be prohibitive. So now I’ve added an option to disable the statement profiler. Setting the stmts=0 option turns NYTProf into a very lightweight subroutine profiler.

Caller Information for XSubs

NYTProf reports time spent in subroutines broken down by calling location. It’s one of its key features. The caller details are embedded as comment annotation into the report for each perl source code file.

That has worked very well for perl subs, but there’s no natural home for XS subroutines because there was no source code file available to annotate.

There’s a partial solution included in NYTProf 2.07: XS subs that are in the same package as profiled perl subs, get annotated subroutine ‘stubs’ appended to the report for the corresponding perl source file.

It works quite well, in particular the common UNIVERSAL methods get annotated:

Also, now these subs have a place in the reports, any references to them are hyper links, naturally.

Sadly it doesn’t work so well for packages that don’t contain any perl subs, like DBI::st. I plan to add per-package report pages for them in a future release.

Clock Docs

I’ve greatly expanded the description of the various clocks available for profiling: POSIX real-time clocks, gettimeofday(), and Time::HiRes.

I’ve also added a caveats section that discusses issues with SMP systems, processor affinity, and virtual machines.

Feedback Wanted

How has NYTProf helped you optimize your applications? Let me know.

Perhaps as blog posts of your own, linking back here, or comments here, or CPAN ratings, or email to the mailing list or just to me. I’d love to hear from you.

Thanks!

NYTProf 2.05 – assorted fixes

Hot on the heels of NYTProf 2.04, I’ve just released NYTProf 2.05. It has a few small but significant bug fixes. From the Changes file:

Fixed alteration of $! during profiling, thanks to Slaven Rezic.

Fixed clock_gettime() clock selection to fallback to CLOCK_REALTIME

if CLOCK_MONOTONIC is not available, e.g, on linux 2.4.

Fixed error when application ends after DB::disable_profile().

Added some docs to Devel::NYTProf::Apache

Added clock=N option to enable user to select the clock.

All the bugs were long-standing ones that happened to come to light and get fixes after 2.04 was released.

The first was the cause of a couple of odd bugs were applications would behave differently when profiled. The problem was that in certain situations the profiler would alter the value of $! between statements, so code that tested for $! would think something had gone wrong.

The second caused weird timing values on some platforms. The statement times would be zero and the subroutine times would be garbage. The problem was that some systems, such as Linux 2.4, define the CLOCK_MONOTONIC macro but don’t implement that clock in the kernel. NYTProf wasn’t testing that the clock worked. Now it does. If the clock_gettime() call fails with the selected clock it falls back to CLOCK_REALTIME (and if that fails NYTProf aborts).

As a bonus I’ve added the ability to select what clock you want to use by setting clock=N in the NYTPROF environment variable, where N is the integer id of the clock you want to use (usually you can find the CLOCK_* macros in the /usr/include/time.h file). This only works if your system supports clock_gettime(), which most modern unix-like systems do, with the notable exception of OS X.

Why would you want to use a different clock?

Well the default, CLOCK_MONOTONIC, is relatively expensive to call, and NYTProf calls it twice per statement. So it’s the dominant cost of the profiler.

Some systems, like Linux 2.6, offer other clocks, like CLOCK_MONOTONIC_FAST that returns the most recently cached value of the clock. That’s typically just 1/10th the cost of CLOCK_MONOTONIC, so your code runs much faster under the profiler.

Naturally there’s a trade-off: the accuracy of the timings drops significantly. Still far better than Devel::DProf, but far worse than normal. You’re dependent on how often the cached time value gets updated by your kernel. I saw a figure of “600 times per second” quoted for one system, but I can’t find the link now. My advice would be to steer clear of this feature unless you feel comfortable with the issues like these.

Enjoy!

An “Indian Summer” day of domesticity

Today was a great day.

An ordinary domestic weekend kind of a day, but blessed with great weather and no engagements for any of us.

A chance for me to catch up on many of the long neglected house and garden chores, and for our family to enjoy some chilled-out time around the house.

We’ve been burning more wood on the fire recently and the chimney was overdue a sweep. So I blocked up the fireplace with a bin bag and some foam, then climbed on the roof and swept down from the chimney pot. It was my first time sweeping a chimney and this method made it very simple. I just left the fireplace sealed up for a few hours for the dust to settle before opening it up and shovelling out the shoot along with the old ash.

My wife had seen a recipe for “Hedgerow Jelly” by Elisabeth Luard in a magazine, so she, and our two daughters, walked out down the local lanes to find the ingredients: 1kg crab apples or Bramleys; 250g blackberries, 250g sloes or bullaces or damsons, 250g rowan berries or elderberries, 125g hawthorn berries (haws), 125g rosehips (wild or cultivated), plus about 2 litres of water and about 2kg of granulated sugar.

(Our eldest daughter decided to take photographs of the process, which ultimately led to this blog post — I normally rarely post about home life.)

Meanwhile, after clearing gutters and sweeping the drive, I was working down by the lake front thinning out some trees. A few months ago we had a group of big old trees one corner of our garden cut down. Mostly pine with some silver birch and hazel. (You can see in the photo the large pile of wood I still need to chop, chop, chop.) That gave us a view of the lake again. Something we’d lost over the years since we moved in as the trees have grown up and bushed out.

Having got that narrow view of the lake I wanted to extend it through the trees to the right by thinning out their lower branches.

The summer weather in Ireland has been pretty poor the last few years. We seem to get a few lovely weeks in spring, then summer is a mostly washout with grey skies and regular showers. Then, just after the kids go back to school, we get a few bright weeks again—the Irish “Indian Summer”—around September/October. Fabulous days like today.

There’s a reason Ireland is so green. It rains. A lot. Especially in the west were we are. Despite the bright sunshine today the grass was squelching under my feet. The logs need to be stored or they’ll rot over the winter. I shifted a few wheelbarrow loads today and started clearing a space off the grass to store them.

A friend and I wielded axes a few weeks ago so we’ve a reasonable stockpile of split logs now, which I finished stacking today. I’ve been told the wood gets harder to split the longer its left, and I don’t fancy trying to split logs on dark wet winter nights, so I guess I need to keep at it.

Back indoors the domesticity continued with our eldest daughter making the topping for an Apple Crumble.

Our eating apple tree is still recovering from the pruning I gave it last winter (it’s first for many years) but our cooking apple tree seems very happy this year.

Yum!

That was the glossy edited version of the day. The uncut version includes me falling off the ladder into the lake, our youngest daughter having a tantrum and throwing handfuls of apple crumble onto the floor, and me pouring scalding hot Hedgerow Jelly over my hand. Ahh, domestic bliss!

NYTProf 2.04 gives you 90% smaller data files

At OSCON this year I gave a talk on my new pet project Devel::NYTProf v2 to a packed room. Turned out to be a lot of fun.

“The first thing I need to do is talk about Devel::DProf because it needs to be taken out and shot.”

I made a screencast of the 40 minute talk which you can watch on blip.tv here. Worth watching for the background on profilers, the demo of NYTProf, and the questions, not to mention the teasing I get along the way.

One of the final questions was about the size of the profile data file that NYTProf produces. One of the major drawbacks of statement-level profiling is the volume of data it generates while profiling your code. For every statement executed the profiler streams out the file id, the line number, and the time spent. For every statement! When trying to profile a full application doing real work the volume of data generated quickly becomes impractical to deal with. Multi-gigabyte files are common.

This was the major problem with Devel::SmallProf, which generated text files while profiling. Salvador Fandiño García addressed that in Devel::FastProf by writing the data in a compact binary form. A vast improvement that contributed to Devel::FastProf (on which Devel::NYTProf is based) being the first statement-level profiler worth using on large applications. Even so, the volume of data generated was still a problem when profiling all but short running applications.

NYTProf 2.03 was producing profile data at the rate of about 13MB per million statements executed. That might not sound too bad until you realise that on modern systems with cpu intensive code, perl can execute millions of statements every few seconds.

I could see a way to approximately halve the data volume by changing the format to optimize of the common case of consecutive statements being in the same file, but that wasn’t going to be enough. The best way forward would be to add zip compression. It would be easy enough to pipe the output stream through a separate zip process, but that approach has a problem: the zip process will be soaking up cpu time asynchronously from the app being profiled. That would affect the realtime measurements in an unpredictable way.

I realized back in June that a better approach would be to embed zip compression into NYTProf itself. Around the end of July Nicholas Clark, current Perl Pumpkin, got involved and was motivated to implement the internal zipping because he was “generating over 4Gb of profile data trying to profile the harness in the Perl 5 core running tests in parallel“.

He did a great job. The zlib library is automatically detected at build time and, if available, the code to dynamically route i/o through the zip library gets compiled in. The output stream starts in normal mode, so you can easily see and read the plain text headers in the data file, then switches to zip compression for the profile data. How well did it work out? This graph tells the story:

(The data relates to profiling perlcritic running on a portion of its own source code on my MacBook Pre 2GHz laptop. I only took one sample at each compression level so there may be some noise in the results.)

The data file size (red) plummets even at the lowest compression level. Also note the corresponding drop in system time (yellow) due to the reduction in context switches and file i/o.

I’ve set the default compression level to 6. I doubt you’ll want to change it, but you can by adding compression=N to the NYTPROF environment variable.

Here are the change notes for the 2.04 release:

Fixed rare divide-by-zero error in reporting code.

Fixed rare core dump in reporting code.

Fixed detection of #line directives to be more picky.

Fixed some compiler warnings thanks to Richard Foley.

Added on-the-fly ~90% zip compression thanks to Nicholas Clark.

Reduces data file size per million statements executed

from approx ~13MB to ~1MB (depends on code being profiled).

Added extra table of all subs sorted by inclusive time.

No longer warns about '/loader/0x800d8c/...' synthetic file

names perl assigns reading code from a CODE ref in @INC

Enjoy!

Arithmetic, Population, and Energy

Dr. Albert Bartlett is emeritus Professor of Physics at the University of Colorado at Boulder, USA. He has given this lecture on “Arithmetic, Population, and Energy” over 1,500 times.

“The greatest shortcoming of the human race is our inability to understand the exponential function.”

A challenging statement. Having seen the lecture now I can understand why it has been so popular, and is so important. I came across it recently and felt it was worth sharing.

You can watch it on YouTube here as a series of bite-size 9 minute clips.

(After that you might like this unrelated take on applying risk management.)

The Italian Perl Workshop

I spent a very pleasant few days in Pisa, Italy, last week. I’d been invited to speak at the Fourth Italian Perl Workshop. The workshop was a great success. In fact calling it a “workshop” is selling it short. It’s more of a mini-conference:

I spent a very pleasant few days in Pisa, Italy, last week. I’d been invited to speak at the Fourth Italian Perl Workshop. The workshop was a great success. In fact calling it a “workshop” is selling it short. It’s more of a mini-conference:

“2 days of conference, 2 simultaneous tracks, more than 30 talks, 120 attendees, 20 sponsors and many international guests”

The whole event ran very smoothly thanks to a great team lead by Gianni Ceccarelli, Francesco Nitido, and Enrico Sorcinelli. I’ll echo the compliments of one attendee “Complimenti sinceri agli organizzatori! Bravissimi! Tutto perfetto!”

I gave short talk on Gofer on the Thursday, and then two 40 minutes talks on Friday: Perl Myths, and Devel::NYTProf. I hope to upload screencasts and slides next week. The talks were all recorded on video so I imagine they’ll be uploaded at some point. I’ll add links here to them when they are.

The majority of the sessions were in Italian so, since my Italian is practically non-existant, I had plenty of time to work.

Or at least try to. The one disappointment of the trip for me was the apparent poor quality of the Italian internet. Using mtr I’d regularly see over 20% packet loss within telecomitalia.it and interbusiness.it from my hotel room. Occasionally over 50%. It got much better at night, so I’d do more work then. At the conference venue the Italian academic network (garr.net) also regularly had over 20% packet loss at its link to the internet. All this was, of course, outside the control of the organisers.

The “corridoor track” at perl conferences is always good. I had a chance to talk to Rafel Garcia-Suarez (and meet his lovely wife and new baby son), Matt S Trout, Hakim Cassimally, Michel Rodriguez, Marcus Ramberg, and many others.

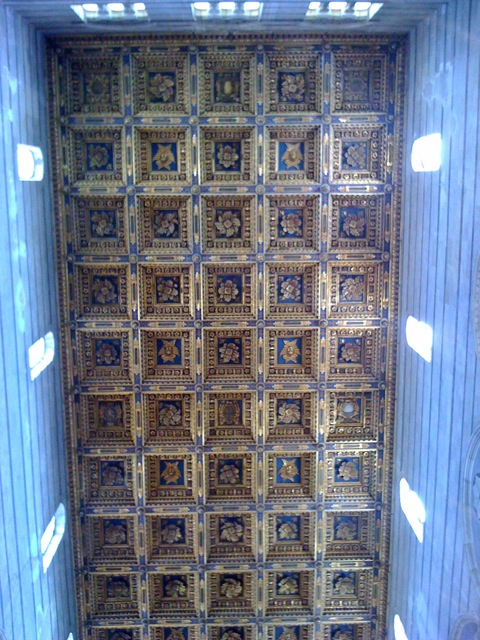

I had opted to take a very early fight so I’d have a day being a tourist in Pisa before the conference started. The weather was beautiful and I had a lovely time strolling though the streets of this ancient city.

I didn’t take my camera with me, but I did take my iPhone so I was able to capture a few snaps as I strolled around and climbed the tower. (Yes, it really does lean in a disconcerting “it must be about to fall down” way. All the more dramatic when you’re up close and can appreciate the massive scale of the tower.)